Refined deepfakes threaten reality

8 December 2020

Opinion: The image doctored by the Chinese Foreign Ministry breaks with our agreed commitment to truth, writes Neal Curtis. And it's only the tip of the iceberg.

News of Australian Prime Minister Scott Morrison’s expression of outrage at the faked image of an Australian soldier holding a bloodied knife to the neck of an Afghan child - which appeared on the official Twitter account of the Chinese Foreign Ministry - raises a number of questions about the ongoing diplomatic spat between the two countries.

In retaliation for the Australian government raising questions about the Chinese government’s treatment of Uighur Muslims, China replied by calling attention to the report presenting evidence of unlawful killings of Afghan people by Australian forces.

While national governments pressing each other on matters of human rights is not new, the nub of this particular case is the additional scandal generated by the use of a fabricated image and the use of social media to disseminate it.

The scandal stems from the very particular but contradictory power of images. In the first instance, there is a social contract that makes images the cornerstone of empirical evidence, supposedly providing a reliable record that an action or an event actually took place. In this regard, images have always been essential to scientific demonstration and proof. This image clearly breaks that contract.

At the same time, however, images also have a strange magical quality. Images can be spellbinding and captivating. They have an extraordinary capacity to persuade, and can even have that uncanny quality of seeming to possess a spirit, something that can be seen whenever we consider disposing of old photos and can’t quite bring ourselves to part with that one of grandma. Conversely, it is also why tearing up a photo of someone who has wronged us can be so cathartic.

So, the image of the Australian soldier breaks with our agreed commitment to truth while at the same time retaining its power to captivate and disturb. What should concern us here, though, is that this is nothing new and it is only the tip of the iceberg of what is to come. Creating false images of the enemy or an opponent is as old as propaganda itself. One of the most interesting examples of this history was when US forgers were employed during World War II to create fake Nazi stamps. These looked just like the originals, only the face of Hitler was made to look more skull-like and hence present him as an avatar of death. These stamps were attached to actual letters and dropped from US planes as they flew over Germany.

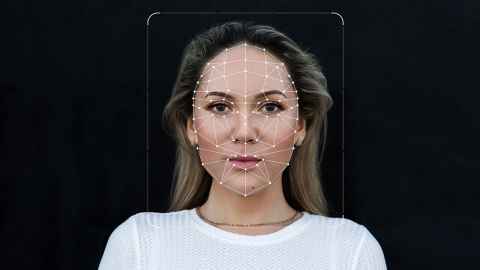

However, the reason this is only the tip of the iceberg, and what we should really be worried about, is a new type of synthetic media known as ‘deepfakes’. These are most often moving images generated by artificial intelligence to simulate reality. The French sociologist, Jean Baudrillard, once said that to fake an illness is to imitate being ill without actually showing symptoms, while a simulation is to demonstrate symptoms while not actually being ill. This is the effect of deepfakes. To all intents and purposes they appear ‘real’.

You can see a number of rather crude versions - sometimes referred to as ‘cheapfakes’ - that have been used for entertainment or educational purposes on YouTube. They will very soon become increasingly refined, however, and the technology increasingly ubiquitous. This has massive implications for our future conception of reality, especially in our ‘post-truth’ age where disinformation and ‘fake news’ are rampant on social media.

Let me be plain, though. This image used by the Chinese government to draw attention to unlawful killings by Australian soldiers in Afghanistan shows that what we understand by reality is already heavily mediated such that this sort of information is usually ‘edited out’ and does not inform our perception of the world. We might say, then, that our perception of reality is already false to some extent. We might even go further and say that if the reality of these killings is intentionally withheld from us then our perception of reality is already fake, if by this term we understand something pretending to be what it is not (i.e. that military intervention is always virtuous and humane).

If our perception of reality is not always entirely reliable, deepfakes, especially in video form, will introduce new levels of unreality that we are in no way prepared for. What will happen when completely fabricated yet entirely ‘real’ videos start to appear of politicians doing or saying something they haven’t? Or individuals find themselves subject to a new form of deepfake trolling, such as the current trend in non-consensual porn? Not only can this destroy reputations, it could easily lead to war, and will clearly be a new political tool for bad actors across the globe.

American composer and music professor David Cope has for many years been writing algorithms that can listen to a composer’s music and create a new and original piece in that composer’s style, Beethoven’s for example, and Microsoft has created a machine that learned the brush techniques and compositional style of Rembrandt to create a ‘new’ Rembrandt, one that is both a copy and an original, a fake and genuine. Yet it is the more prosaic synthetic media produced by artificial intelligence that threatens to completely undermine what we think or believe to be real.

So, while the Chinese fake might undermine diplomatic protocols and the rules of international relations, if we as citizens do not make very clear demands for changes in the regulation, use and dissemination of information, very soon we will not know what is up or down, fact or fiction, real or simulation. As humans, our eyes and ears have been key to our evolution. Where do we go when we can’t believe what they tell us?

Dr Neal Curtis is Associate Professor of Media and Communication in the Faculty of Arts.

This article reflects the opinion of the author and not necessarily the views of the University of Auckland.

Used with permission from Newsroom, ‘Refined deepfakes threaten reality’, 8 December 2020.
